CoSQL

1.0


Nov 12, 2024: We have released the full Spider 2.0 paper, data, and code. Follow the guidelines to submit your scores to the leaderboard!

Aug 28, 2024: The early access version of Spider 2.0 (a more realistic and challenging text-to-SQL task) is now available! We expect to release the whole dataset in 1-2 weeks. As this is a preliminary release, there may be errors. Your feedback would be invaluable in refining the dataset!

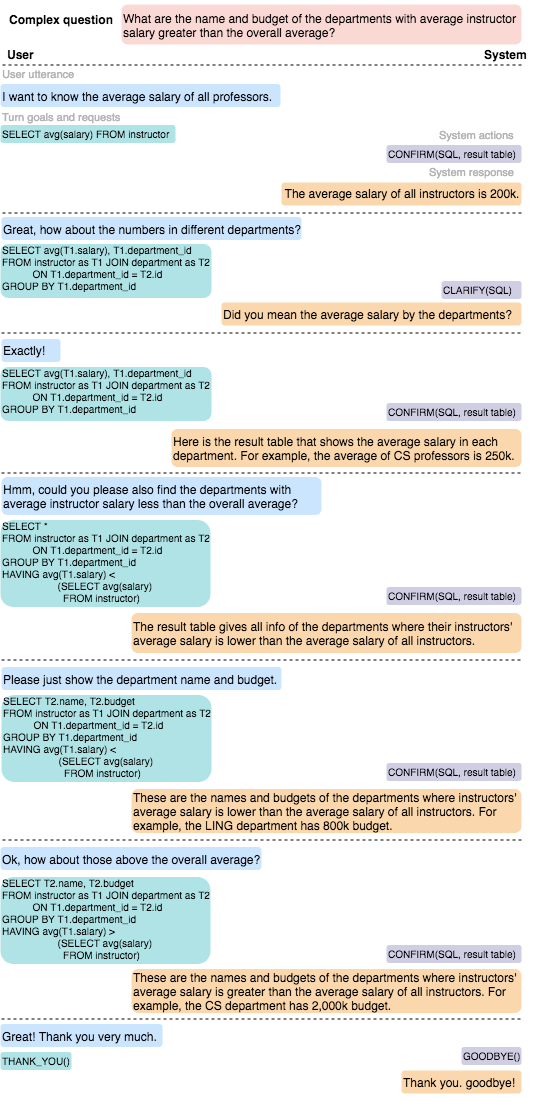

INFORM_SQL label given the interaction context and the DB schema. Compared with other context-dependent text-to-SQL tasks such as SParC, the dialogue state tracking (DST) task in CoSQL also includes ambiguous questions when the user affirms the system's clarification of them. In that case, the system clarification is also given as part of the interaction context for predicting the SQL query that corresponds to the question. As in the Spider and SParC tasks, we report Exact Set Match without Values:

| Rank | Date | Model | Affiliation (Paper) | Question Match | Interaction Match |
|---|---|---|---|---|---|
| 1 | Feb 14, 2022 | STAR | Alibaba DAMO & SIAT (Cai and Li et al., EMNLP-Findings '22) | 57.8 | 28.2 |
| 2 | Apr 2, 2022 | CQR-SQL | Tencent Cloud Xiaowei (Xiao et al., '22) | 58.3 | 27.4 |
| 3 | Jun 4, 2022 | RASAT + PICARD | SJTU LUMIA & Netmind.AI (Qi et al., EMNLP '22) | 55.7 | 26.5 |
| 4 | Dec 26, 2022 | MT Training + N-best List Rerankers + PICARD | Alexa AI (Parthasarathi et al., ICASSP '23) | 55.8 | 24.8 |
| 5 | Oct 5, 2021 | HIE-SQL + GraPPa | Alibaba DAMO (Zheng et al., ACL-Findings '22) | 53.9 | 24.6 |
| 6 | Jul 14, 2021 | T5-3B+PICARD | Element AI, a ServiceNow company (Scholak et al., EMNLP '21) | 54.6 | 23.7 |
| 7 | Jan 7, 2022 | RATSQL++ + ELECTRA | Anonymous | 53.8 | 22.1 |
| 8 | Sep 21, 2020 | RAT-SQL + SCoRe | Yale & Microsoft Research & PSU (Yu et al., ICLR '21) | 51.6 | 21.2 |
| 9 | Aug 24, 2020 | R²SQL + BERT | Alibaba DAMO (Hui et al., AAAI '21) | 46.8 | 17.0 |
| 10 | Jan 26, 2021 | IST-SQL + BERT | University of Science and Technology of China (Wang et al., AAAI '21) | 41.8 | 15.2 |
| 11 | Nov 16, 2020 | WaveSQL + BERT | Anonymous | 46.1 | 15.1 |
| 12 | May 26, 2020 | IGSQL + BERT | Peking University (Cai et al., EMNLP '20) | 42.5 | 15.0 |
| 13 | Aug 30, 2019 | EditSQL + BERT | Yale University & Salesforce Research (Zhang et al., EMNLP '19) | 40.8 | 13.7 |
| 14 | May 21, 2020 | GAZP + BERT | University of Washington & Facebook AI Research (Zhong et al., EMNLP '20) | 39.7 | 12.8 |
| 15 | Apr 21, 2021 | MemCE | UoE (Jain et al., TACL '21) | 28.4 | 6.2 |
| 16 | Aug 30, 2019 | CD-Seq2Seq | Yale University & Salesforce Research (Yu et al., EMNLP '19) | 13.9 | 2.6 |
| 17 | Aug 30, 2019 | SyntaxSQL-con | Yale University (Yu et al., EMNLP '18) | 14.1 | 2.2 |
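The two score columns above can be illustrated with a simplified sketch. Question Match is the percentage of individual questions whose predicted SQL matches the gold query, and Interaction Match is the percentage of interactions in which every question matches. The sketch assumes each query has already been parsed into a hypothetical per-clause representation (`ParsedSQL`) with literal values replaced by a `VALUE` placeholder, which is what "without values" amounts to here; it is not the official Spider/CoSQL evaluation script, which also handles nested subqueries, keywords, and finer component-level details.

```python
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical parsed form of a SQL query: clause name -> set of components,
# with literal values already replaced by "VALUE" (i.e. "without values").
ParsedSQL = Dict[str, FrozenSet[str]]


def exact_set_match(pred: ParsedSQL, gold: ParsedSQL) -> bool:
    """Return True if every clause has the same set of components.

    Sets make the comparison order-insensitive, so conditions listed in a
    different order still match. A clause missing on one side counts as empty.
    """
    clauses = set(pred) | set(gold)
    return all(pred.get(c, frozenset()) == gold.get(c, frozenset()) for c in clauses)


def question_and_interaction_match(
    interactions: List[List[bool]],
) -> Tuple[float, float]:
    """Aggregate per-question correctness flags into the two leaderboard scores.

    `interactions` holds one list per dialogue, with one flag per question
    (True if that question's predicted SQL exactly matched the gold SQL).
    """
    if not interactions or not all(interactions):
        raise ValueError("every interaction needs at least one question")
    flags = [ok for turns in interactions for ok in turns]
    question_match = 100.0 * sum(flags) / len(flags)
    # An interaction counts only if all of its questions are correct.
    interaction_match = 100.0 * sum(all(turns) for turns in interactions) / len(interactions)
    return question_match, interaction_match


if __name__ == "__main__":
    gold = {"select": frozenset({"count(*)"}),
            "where": frozenset({"age > VALUE", "country = VALUE"})}
    pred = {"select": frozenset({"count(*)"}),
            "where": frozenset({"country = VALUE", "age > VALUE"})}
    print(exact_set_match(pred, gold))  # True: same components, different order

    print(question_and_interaction_match([[True, True], [True, False, True]]))
    # (80.0, 50.0): 4 of 5 questions correct, 1 of 2 interactions fully correct
```

Because Interaction Match requires every turn of a dialogue to be right, it is always at most Question Match, which is consistent with the gap between the two columns in the table.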

| Rank | Date | Model | Affiliation (Paper) | Question Match | Interaction Match |
|---|---|---|---|---|---|
| 1 | Jun 4, 2022 | RASAT + PICARD | SJTU LUMIA & Netmind.AI (Qi et al., EMNLP '22) | 66.3 | 37.4 |
| 2 | May 21, 2020 | GAZP + BERT | University of Washington & Facebook AI Research (Zhong et al., EMNLP '20) | 35.9 | 8.4 |

INFORM_SQL. The input is a SQL query, its execution result, and the DB schema. In addition to naturalness and syntactic correctness, the NL response must stay logically consistent with the SQL query; this is measured by the Logic Correctness Rate (LCR) and is crucial in this task.

| Rank | Date | Model | BLEU | Grammar | LCR (%) |
|---|---|---|---|---|---|
| 1 | Dec 15, 2022 | Complexity Aware Prompts + T5 (Alexa AI) | 28.1 | - | - |
| 2 | Aug 30, 2019 | Template baseline | 9.3 | 4.0 | 41.0 |
| 3 | Aug 30, 2019 | Pointer-generator baseline | 15.1 | 3.6 | 35.0 |
| 4 | Aug 30, 2019 | Seq2Seq baseline | 14.1 | 3.5 | 27.0 |

INFORM_SQL.

| Rank | Date | Model | Accuracy |
|---|---|---|---|
| 1 | Dec 20, 2019 | UTran-SQL | 87.2 |
| 2 | Aug 30, 2019 | TBCNN-pair baseline | 83.9 |
| 3 | Aug 30, 2019 | Majority baseline | 62.8 |